Experimenting with SeaweedFS

I ran it locally on macOS with Docker Desktop.

First Pass

Launch the server:

docker run --rm -p 8333:8333 chrislusf/seaweedfs server -s3

Commands:

aws s3 mb s3://test/ --endpoint-url http://localhost:8333 --no-sign-request

echo "This is a test" > ./test.txt

aws s3 cp ./test.txt s3://test/folder/test.txt --endpoint-url http://localhost:8333 --no-sign-request

Using any browser, you can get your file at http://localhost:8333/test/folder/test.txt

curl http://localhost:8333/test/folder/test.txt
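To confirm that the object served over HTTP matches what was uploaded, the two steps above can be wrapped in a small round-trip check. This is only a sketch: `ENDPOINT`, `object_url` and `roundtrip` are names made up for this example, and it assumes the server from the first step is still running with anonymous access.

```shell
# Sketch: upload a file, fetch it back over plain HTTP, and compare the bytes.
# ENDPOINT, object_url and roundtrip are illustrative names, not SeaweedFS tooling.
ENDPOINT="${ENDPOINT:-http://localhost:8333}"

# Build the path-style URL for an object: <endpoint>/<bucket>/<key>
object_url() {
  printf '%s/%s/%s\n' "$ENDPOINT" "$1" "$2"
}

# roundtrip <file> <bucket> <key>: upload, download, compare byte-for-byte.
roundtrip() {
  local file="$1" bucket="$2" key="$3"
  aws s3 cp "$file" "s3://$bucket/$key" \
    --endpoint-url "$ENDPOINT" --no-sign-request || return 1
  # cmp reads the download from stdin ("-") and exits non-zero on any difference
  curl -fsS "$(object_url "$bucket" "$key")" | cmp -s - "$file"
}

# Example (requires the server above to be running):
# roundtrip ./test.txt test folder/test.txt && echo "round trip OK"
```

The function returns non-zero if the upload fails or if the downloaded bytes differ from the local file.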

Second Pass

For the second pass, I used the docker-compose.yml provided in the SeaweedFS repository: https://github.com/seaweedfs/seaweedfs/blob/master/docker/seaweedfs-compose.yml

I’ve updated it a bit: https://webuxlab.com/content/devops/seaweedfs/docker-compose.yml

The other configurations:

- https://webuxlab.com/content/devops/seaweedfs/s3.json

- https://webuxlab.com/content/devops/seaweedfs/fluent.json

- https://webuxlab.com/content/devops/seaweedfs/prometheus/prometheus.yml

docker compose up

Check the cluster status:

curl "http://localhost:9333/cluster/status?pretty=y"

curl "http://localhost:9333/dir/status?pretty=y"

curl "http://localhost:9333/vol/status?pretty=y"
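Right after `docker compose up` the master may not be answering yet, so it can help to poll before checking the status pages. A minimal sketch, assuming the master is published on port 9333 as in the commands above; `retry` and `show_status` are helper names invented for this example.

```shell
# Sketch: wait for the master to answer, then print the three status endpoints.
MASTER="${MASTER:-http://localhost:9333}"

# retry <attempts> <delay-seconds> <command...>: rerun the command until it succeeds.
retry() {
  local attempts="$1" delay="$2" i=1
  shift 2
  while [ "$i" -le "$attempts" ]; do
    "$@" && return 0
    sleep "$delay"
    i=$((i + 1))
  done
  return 1
}

# Query the cluster, directory and volume status pages in turn.
show_status() {
  local path
  for path in cluster/status dir/status vol/status; do
    echo "== /$path"
    curl -fsS "$MASTER/$path?pretty=y" || return 1
    echo
  done
}

# Example: try for about 30 seconds, then dump the status pages.
# retry 15 2 curl -fsS -o /dev/null "$MASTER/cluster/status" && show_status
```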

Testing with S3

See the commands above for testing (transferring files, etc.).

- The configuration: https://github.com/seaweedfs/seaweedfs/wiki/Amazon-S3-API

- Presigned URL: https://github.com/seaweedfs/seaweedfs/wiki/AWS-CLI-with-SeaweedFS#presigned-url

- Development with NodeJS and S3: https://github.com/seaweedfs/seaweedfs/wiki/nodejs-with-Seaweed-S3

- The commands: https://github.com/seaweedfs/seaweedfs/wiki/AWS-CLI-with-SeaweedFS

With the default credentials in use, export the keys before sending the commands:

export AWS_ACCESS_KEY_ID=some_access_key1

export AWS_SECRET_ACCESS_KEY=some_secret_key1

aws --endpoint-url http://localhost:8333 s3 ls

aws --endpoint-url http://localhost:8333 s3 ls s3://test

aws --endpoint-url http://localhost:8333 s3 mb s3://test-1

aws --endpoint-url http://localhost:8333 s3 mb s3://test-2

aws --endpoint-url http://localhost:8333 s3 mb s3://test-3

aws --endpoint-url http://localhost:8333 s3 mb s3://test-4

aws --endpoint-url http://localhost:8333 s3 mb s3://test-5

aws --endpoint-url http://localhost:8333 s3 mb s3://test-6

aws --endpoint-url http://localhost:8333 s3 mb s3://test-7

aws --endpoint-url http://localhost:8333 s3 mb s3://test-8

aws --endpoint-url http://localhost:8333 s3 mb s3://test-9
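The nine `mb` calls above can also be written as a loop. A small sketch, assuming the same endpoint and exported credentials; `make_buckets` is a name chosen for this example.

```shell
# Sketch: create <prefix>-<first> .. <prefix>-<last> in one go.
ENDPOINT="${ENDPOINT:-http://localhost:8333}"

# make_buckets <prefix> <first> <last>
make_buckets() {
  local prefix="$1" i="$2" last="$3"
  while [ "$i" -le "$last" ]; do
    # Stop at the first bucket that cannot be created
    aws --endpoint-url "$ENDPOINT" s3 mb "s3://$prefix-$i" || return 1
    i=$((i + 1))
  done
}

# Example (needs AWS_ACCESS_KEY_ID / AWS_SECRET_ACCESS_KEY exported as above):
# make_buckets test 1 9
```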

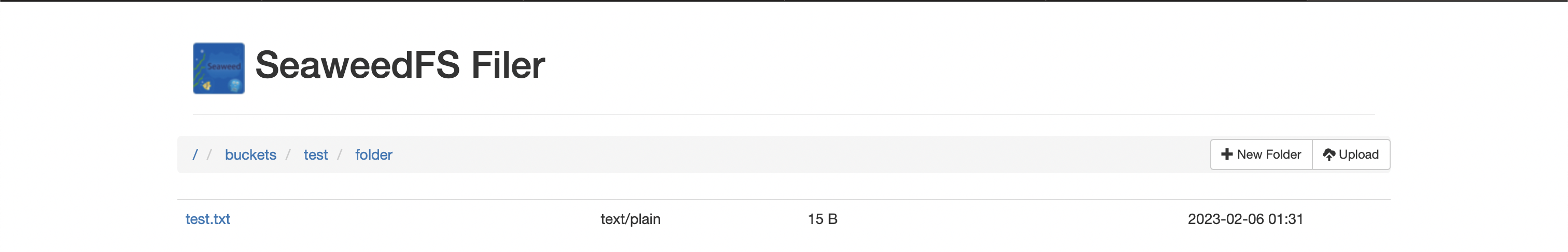

Filer

You can browse the buckets from your browser at http://localhost:8888

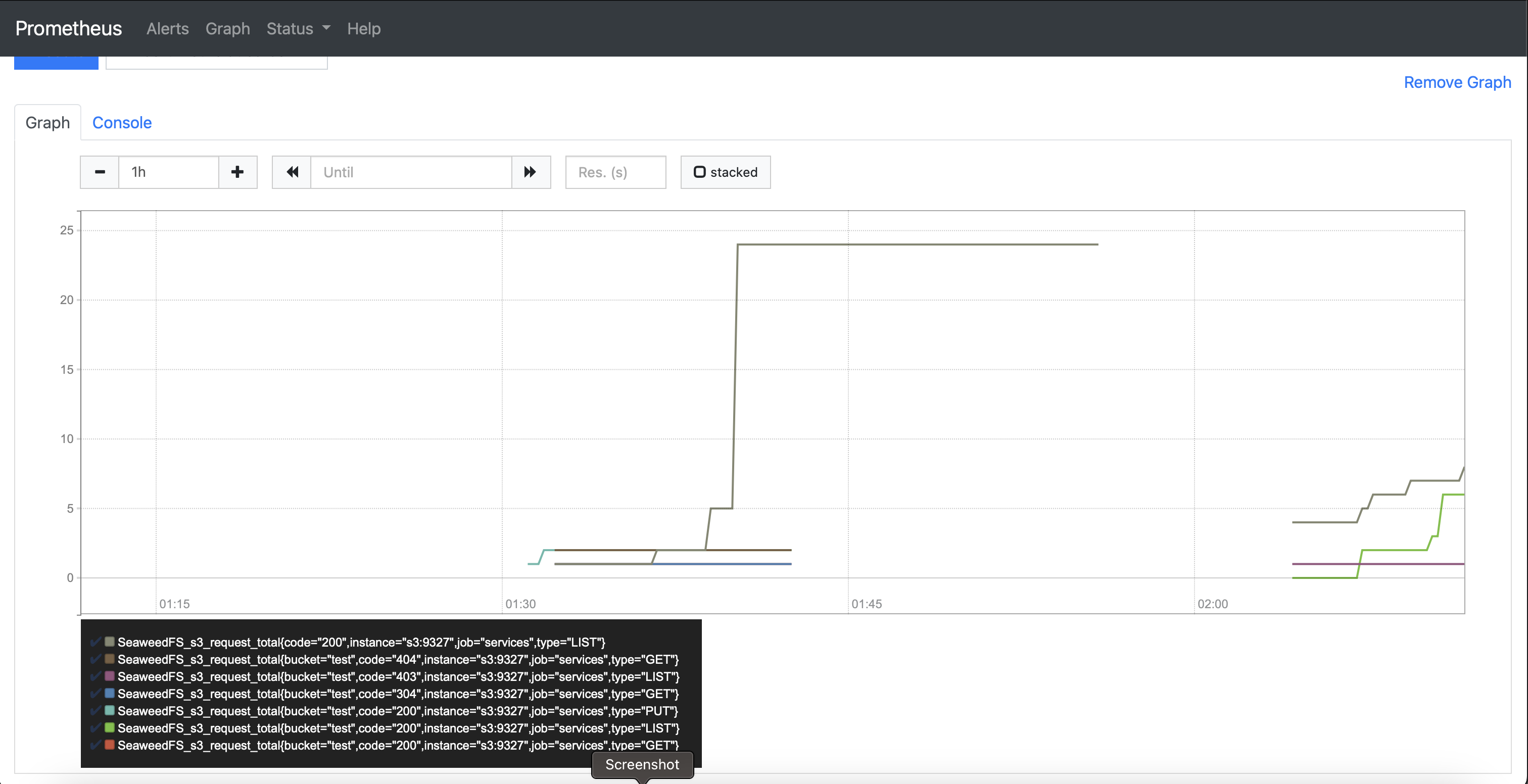
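The same listing can be fetched from the command line. The sketch below assumes two things that are not shown above: that the filer returns JSON when asked with an `Accept: application/json` header, and that buckets live under the `/buckets` directory (the SeaweedFS default, as far as I know). `filer_ls` is a helper name invented for this example.

```shell
# Sketch: list a filer directory as JSON instead of using the browser UI.
# Assumes the filer is on port 8888 and buckets are stored under /buckets.
FILER="${FILER:-http://localhost:8888}"

# filer_ls <path>: print the directory listing for <path>.
filer_ls() {
  local p="${1#/}"   # drop a leading slash, if any
  p="${p%/}"         # drop a trailing slash, if any
  curl -fsS -H "Accept: application/json" "$FILER/$p/?pretty=y"
}

# Examples:
# filer_ls buckets
# filer_ls buckets/test-1
```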

Prometheus

Query Example: http://localhost:9000/graph?g0.range_input=1h&g0.expr=SeaweedFS_s3_request_total&g0.tab=0
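The same metric can also be read without the UI through Prometheus's HTTP API (`/api/v1/query`). A minimal sketch, assuming Prometheus is reachable on port 9000 as in the URL above; `prom_query` is a name made up for this example.

```shell
# Sketch: run an instant PromQL query against the Prometheus HTTP API.
PROM_URL="${PROM_URL:-http://localhost:9000}"

# prom_query <promql>: print the raw JSON response for the expression.
prom_query() {
  # -G sends the url-encoded data as a query string on a GET request
  curl -fsS -G "$PROM_URL/api/v1/query" --data-urlencode "query=$1"
}

# Examples:
# prom_query 'SeaweedFS_s3_request_total'
# prom_query 'sum(rate(SeaweedFS_s3_request_total[5m]))'
```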

Fun with bash

export AWS_ACCESS_KEY_ID=some_access_key1

export AWS_SECRET_ACCESS_KEY=some_secret_key1

mkdir -p /tmp/dummy

pushd /tmp/dummy

for (( a=1; a<=9; a++ ))

do

mkdir -p d$a

for (( c=1; c<=10; c++ ))

do

echo "Hi, $(( $RANDOM % 50 + 1 ))" >> d$a/dummy_${c}_xyz.log

done

r=$(( $RANDOM % 1000 + 1 ))

dd if=/dev/urandom of=d$a/data.bin bs=1048576 count=$r  # r blocks of 1 MiB, same size on GNU and BSD dd

echo "File generated..."

aws --endpoint-url http://localhost:8333 s3 cp ./d$a/ s3://test-$a/ --recursive --exclude "*" --include "*.bin" --include "*_xyz.log"

done

popd

rm -rf /tmp/dummy/

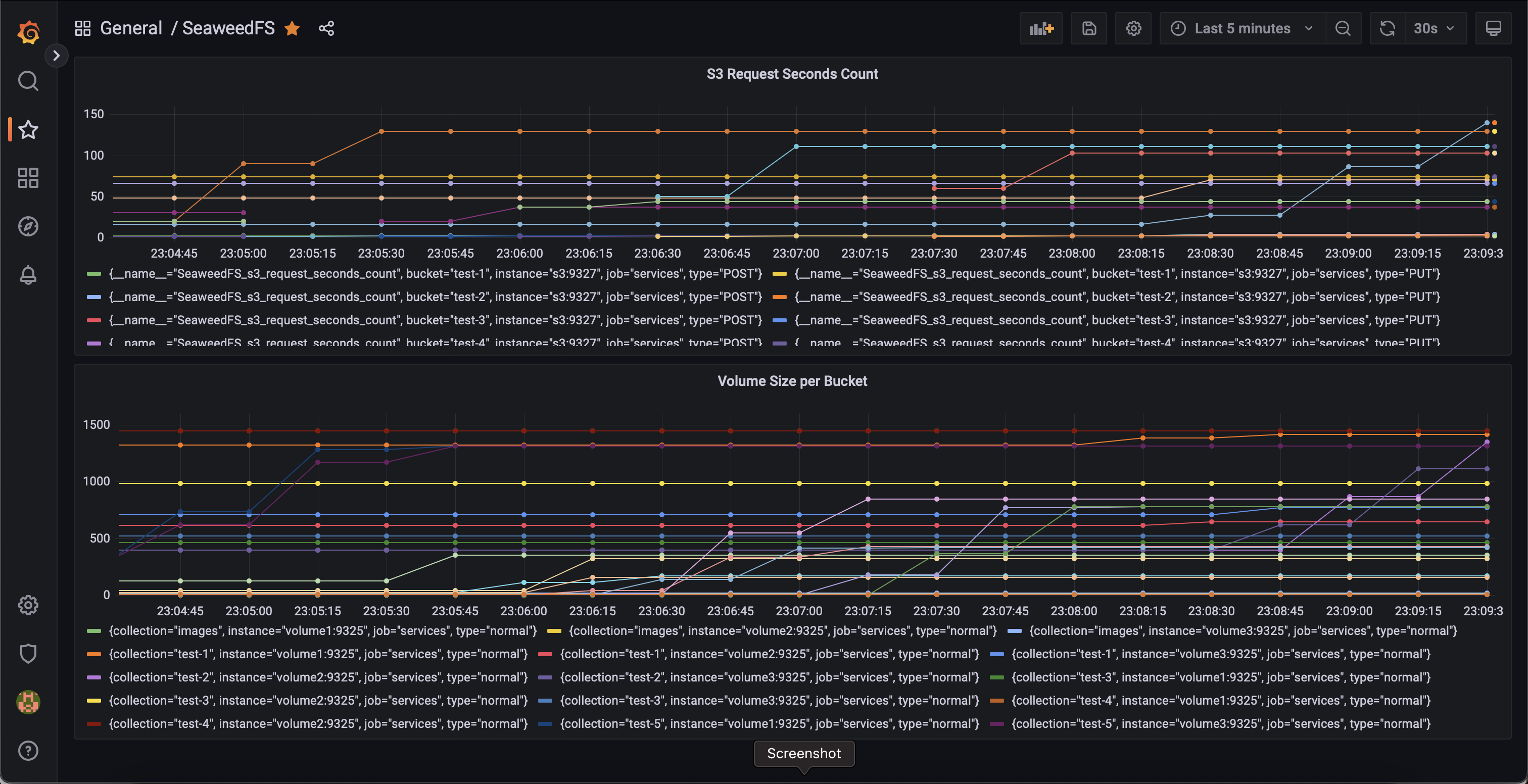
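Once done experimenting, the test buckets and their contents can be removed again. A sketch, assuming the same endpoint and credentials as the script above; `remove_buckets` is a name chosen for this example, and `aws s3 rb --force` deletes every object in the bucket before removing it, so use it with care.

```shell
# Sketch: delete <prefix>-<first> .. <prefix>-<last>, including all objects in them.
ENDPOINT="${ENDPOINT:-http://localhost:8333}"

# remove_buckets <prefix> <first> <last>
remove_buckets() {
  local prefix="$1" i="$2" last="$3"
  while [ "$i" -le "$last" ]; do
    # Keep going if one bucket is missing, but report it on stderr
    aws --endpoint-url "$ENDPOINT" s3 rb "s3://$prefix-$i" --force \
      || echo "skipped $prefix-$i" >&2
    i=$((i + 1))
  done
}

# Example:
# remove_buckets test 1 9
```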

Grafana